The 10 Best DataGrab Sites of 2023

This post covers the best alternatives to DataGrab. DataGrab is an online data scraping tool that extracts URLs and their content from websites. It can collect a large amount of data from the internet at once and download web pages to your computer for development work. It was designed to be fast, simple, and good at storing results. You can use it to find competitors' products and prices, then export DataGrab's data to a .csv file, which makes it simpler to compare your products with your competitors'.
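As a rough illustration of that export-and-compare step, the Python sketch below writes scraped prices to CSV and adds a column showing how far each of your prices sits above or below a competitor's. It uses only the standard library; the rows and column names are hypothetical and do not reflect DataGrab's actual export format.

```python
import csv
import io

# Hypothetical scraped rows; field names and prices are illustrative,
# not a format DataGrab is documented to produce.
rows = [
    {"product": "Widget A", "our_price": 19.99, "competitor_price": 17.49},
    {"product": "Widget B", "our_price": 5.00, "competitor_price": 5.25},
]

def export_price_comparison(records):
    """Return CSV text with an extra column: our price minus the competitor's."""
    buffer = io.StringIO()
    fields = ["product", "our_price", "competitor_price", "difference"]
    writer = csv.DictWriter(buffer, fieldnames=fields)
    writer.writeheader()
    for row in records:
        # Round to cents so floating-point noise does not leak into the file.
        diff = round(row["our_price"] - row["competitor_price"], 2)
        writer.writerow({**row, "difference": diff})
    return buffer.getvalue()

print(export_price_comparison(rows))
```

To save the result to disk instead, write the returned text to a file opened with `open("prices.csv", "w", newline="")`.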

Even websites that restrict sharing their databases, now or in the future, can be a source of useful information. The data is easy to access and is gathered instantly from across the internet. DataGrab advertises access to the largest database of blogs, news, videos, and conversations. If you want to download a report on a specific term or website, it will provide the necessary information.


1. MyDataProvider

MyDataProvider is a web scraping service that lets customers get information about any item listed on e-commerce websites in the major foreign markets. Customers can get product details, prices, and reviews for these items, and the service makes it possible to compare prices between Amazon and other websites. It can be accessed from any internet-connected device, including desktop computers, laptops, tablets, and phones.

Product comparison information is retrieved quickly whenever you request more details about a product. All of the information about each product is saved, so it is easy to request data on several of your favorites later. The service suits people who are short on time and cannot spare the hours that product research usually takes. With MyDataProvider, it is easy to keep track of items you might be interested in.
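The "saved in case you need it again" behavior described above amounts to caching. The sketch below is a hypothetical illustration, not MyDataProvider's implementation: the `ProductCache` class and the `fake_fetch` stand-in are invented names, and a real setup would plug in an actual scraping call.

```python
class ProductCache:
    """Hypothetical cache: fetch each product's details once, then reuse the saved copy."""

    def __init__(self, fetch):
        self.fetch = fetch        # callable: product_id -> dict of details
        self.saved = {}           # product_id -> previously fetched details
        self.fetch_count = 0      # how many real fetches were made

    def get(self, product_id):
        if product_id not in self.saved:
            self.saved[product_id] = self.fetch(product_id)
            self.fetch_count += 1
        return self.saved[product_id]

def fake_fetch(product_id):
    # Stand-in for a real scraping request; returns illustrative data.
    return {"id": product_id, "price": 9.99}

cache = ProductCache(fake_fetch)
cache.get("B001")
cache.get("B001")   # served from the cache, no second fetch
cache.get("B002")
print(cache.fetch_count)  # 2
```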

2. No-Coding Data Scraper

This is an alternative to DataGrab. NoCoding Data Scraper is a simple web scraping application that can gather arbitrary data from other websites for use in many situations, such as SEO, affiliate marketing, and more. With just a few clicks, your data is downloaded in minutes. Best of all, it requires no prior programming knowledge: the program scrapes the relevant data automatically.

Once the data is displayed in a well-designed table or chart, you can download it or move it to Excel or another application to edit it. The application can scrape a wide variety of data, including text, phone numbers, email addresses, postal addresses, URLs, Facebook location information, Twitter accounts, and more. Overall, NoCoding Data Scraper is a great program worth considering as a replacement.
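To give a sense of how a scraper can pull emails, URLs, and phone numbers out of page text, here is a minimal regular-expression sketch in Python. The patterns are simplified assumptions for illustration; they are not what NoCoding Data Scraper uses, and real-world addresses and phone formats vary far more than they cover.

```python
import re

# Simplified patterns for illustration only; real data needs more robust rules.
PATTERNS = {
    "emails": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "urls": re.compile(r"https?://[^\s\"'<>]+"),
    "phones": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def extract_contacts(text):
    """Return every match for each pattern, in order of appearance."""
    return {name: pattern.findall(text) for name, pattern in PATTERNS.items()}

sample = "Contact sales@example.com or call +1 555-010-2030. Docs: https://example.com/help"
print(extract_contacts(sample))
```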

3. Outscraper

Outscraper is more than just a scraper; it is also a fully scalable data-mining tool that lets you create bots that gather huge amounts of data on their own. It can extract HTML pages, text, and images from websites and republish them elsewhere online. It is so easy to use that you can start scraping data from the web within 30 minutes of getting it. One of its most important benefits is the ability to scrape data using regular expressions. This is an alternative to DataGrab.

This means you have full control over what you scrape and where (for example, which fields you extract). With object-oriented scraping, you can quickly reuse code to scrape many websites that share the same structure, and you can change your extraction criteria by swapping code from one extraction to another. Almost every part of the extraction process, including Outscraper's appearance, can be customized.
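The combination of regex fields and object-oriented reuse can be sketched in a few lines of Python. This is not Outscraper's API: `RegexExtractor` and the field patterns are hypothetical, and regexes over HTML only work when pages follow a consistent template.

```python
import re

class RegexExtractor:
    """Hypothetical reusable extractor: one set of field patterns applied
    to any page that shares the same HTML structure."""

    def __init__(self, fields):
        # Each pattern must contain exactly one capturing group.
        self.fields = {name: re.compile(p, re.S) for name, p in fields.items()}

    def extract(self, html):
        record = {}
        for name, pattern in self.fields.items():
            match = pattern.search(html)
            record[name] = match.group(1).strip() if match else None
        return record

# Define the fields once, then reuse the extractor on every page of the same layout.
product_page = RegexExtractor({
    "title": r'<h1 class="title">(.*?)</h1>',
    "price": r'<span class="price">\$([\d.]+)</span>',
})

page_a = '<h1 class="title">Blue Mug</h1><span class="price">$12.50</span>'
page_b = '<h1 class="title">Red Mug</h1>'
print(product_page.extract(page_a))
print(product_page.extract(page_b))
```

Changing the extraction criteria then means passing a different pattern dictionary rather than rewriting the extraction logic.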

4. ScrapeHunt

ScrapeHunt is a web scraping database and API tool that lets you save and reuse data scraped from any website. Its fast, high-performance design is meant to make extraction quicker and simpler than competing services. When you search, the program returns a list of plain-text URLs to scrape. It saves many work hours by providing complete and accurate scraping data for use in applications.

It helps you track when certain products or brand names are mentioned, learn more about websites, build lists of product categories, and create price comparison tools. To get data, browse the database or use the ScrapeHunt API. The API accepts HTTP requests and returns responses in JSON format. Developers who sign up on the website can generate an API key, which must be included in every API call.
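The request pattern described above (an HTTP call carrying an API key, with a JSON reply) looks roughly like this in Python's standard library. The base URL, the `q` parameter, and the `X-Api-Key` header name are placeholders invented for illustration; check ScrapeHunt's own documentation for the real endpoint and authentication scheme.

```python
import json
import urllib.parse
import urllib.request

# Placeholder values: the URL, query parameter, and header name are
# assumptions for illustration, not ScrapeHunt's documented API.
API_BASE = "https://api.example.com/v1/search"
API_KEY = "YOUR_API_KEY"

def build_request(query, api_key=API_KEY):
    """Build a GET request that carries the API key, as every call must."""
    url = API_BASE + "?" + urllib.parse.urlencode({"q": query})
    return urllib.request.Request(url, headers={"X-Api-Key": api_key})

def parse_response(body):
    """Decode a JSON reply into Python objects."""
    return json.loads(body)

req = build_request("wireless headphones")
print(req.full_url)  # https://api.example.com/v1/search?q=wireless+headphones
# Sending it for real: parse_response(urllib.request.urlopen(req).read())
print(parse_response('{"results": [{"url": "https://example.com/p/1"}]}'))
```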

5. ScraperBox

ScraperBox is a web scraping API that gathers data from various sources. Its easy-to-use interface speeds up data collection for developers, and setup is simple: you provide a file that lists the data you want to collect. It offers a useful and dependable HTTP API for a range of web scraping tasks. ScraperBox can handle cookies, follow links, process interactive HTML forms, and handle output encoding.

Its range of features makes it useful for URL tracking, web development, and web design, for example to pull information from social networks or other websites into a new site. The API is designed to be flexible and easy to integrate with any website. Because it supports multiple languages, it is well suited to foreign-language data such as news stories, product prices, and sentiment analysis. It can also scrape information from the most popular shopping websites, such as Amazon, eBay, and Shopify.
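Handling cookies and following links are two of the capabilities listed for ScraperBox. The Python sketch below shows what those involve using only the standard library; it does not use or imitate ScraperBox's own API, and the `LinkCollector` class and example URLs are hypothetical.

```python
import http.cookiejar
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkCollector(HTMLParser):
    """Collect absolute URLs from every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, href))

# An opener whose cookie jar stores cookies from responses and sends them
# back on later requests, much like a browser session.
cookie_jar = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie_jar))

html = '<a href="/page/2">Next</a> <a href="https://other.example/">Other</a> <a>No link</a>'
collector = LinkCollector("https://shop.example/page/1")
collector.feed(html)
print(collector.links)
# Following a link with the same session: opener.open(collector.links[0]).read()
```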

6. ScrapingBee

This is an alternative to DataGrab. ScrapingBee offers a web scraping API for collecting information from websites. With it, you can build data collection or export features for your business. It is well suited to market research, business intelligence, product research, data mining, and e-commerce monetization. The information you collect, including website content, product descriptions, and prices, can be saved or analyzed.

Although it is designed for businesses and developers, anyone can use the service. Its API-based design lets users add web scraping features to their existing apps. It uses proxies and verification to retrieve data from websites safely. Daily backups of website data to a secure server reduce the need for repeated proxy and bot requests. Results are delivered in whichever format you choose, JSON or CSV.
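Routing scraping traffic through a proxy is one common reason to use a service like this. The sketch below shows the do-it-yourself equivalent with Python's standard library, reading "verification" as TLS certificate verification, which is an assumption. The proxy address is a placeholder, and none of this reflects ScrapingBee's own API.

```python
import ssl
import urllib.request

# Placeholder proxy address; substitute a proxy you are authorized to use.
PROXY_URL = "http://proxy.example:8080"

def build_proxied_opener(proxy_url=PROXY_URL):
    """Route HTTP and HTTPS traffic through a proxy while keeping
    TLS certificate and hostname checks enabled."""
    proxy = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    # create_default_context() verifies certificates and hostnames by default.
    tls = urllib.request.HTTPSHandler(context=ssl.create_default_context())
    return urllib.request.build_opener(proxy, tls)

opener = build_proxied_opener()
# opener.open("https://example.com/") would now be sent through the proxy.
```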

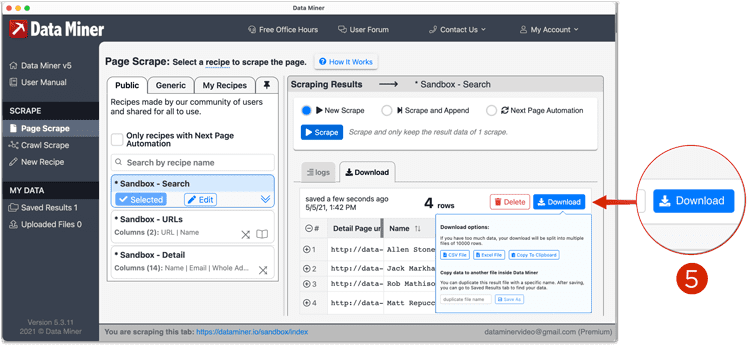

7. Data Miner

Data Miner is a web data extraction application that lets you read and extract data from websites and then analyze what you have collected. It pulls data from a URL you provide: enter the URL you want to scrape, pick from the options that appear, and the tool does the rest.

Data Miner is designed to be fast and reliable, so it can handle websites that don't follow conventions, such as those without clean URLs, those that split AJAX, JavaScript, and CSS across different folders, or those that use the same ID for more than one element. It can be used out of the box or extended to fit specific needs, using custom classes that provide all the steps needed to pull data from a specific website. Data Miner is a great product worth considering as a replacement.
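One of those irregularities, the same ID reused on several elements, trips up tools that assume IDs are unique. The sketch below shows one way a parser can tolerate it by collecting the text of every matching element. It is a hypothetical illustration built on Python's `html.parser`, not Data Miner's code, and it does not handle an element with the target ID nested inside another.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}

class TextById(HTMLParser):
    """Collect the text of every element with a given id, even when a page
    (incorrectly) reuses that id for several elements."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.depth = 0        # nesting depth while inside a matching element
        self.current = []     # text fragments of the element being read
        self.matches = []     # finished text of each matching element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.matches.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)

html = '<div id="price">$10</div><p>Ad</p><div id="price">$12 <b>sale</b><br></div>'
parser = TextById("price")
parser.feed(html)
parser.close()
print(parser.matches)
```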

8. ScrapingBot

ScrapingBot is a web scraping API service that makes it easy to get structured data from websites. The tool makes you more productive by handling repetitive tasks and fixing common mistakes. The main idea is that you can start using it with just a few lines of code, thanks to its plain and simple Python API. You can also schedule web scrapes directly from the command line.

This is an alternative to DataGrab. You can let the service do the hard work for you or integrate it into your application. It offers a simple way to describe common tasks and processes, which makes it easy to keep data consistent even when many people work on the same codebase. Other features include dynamic crawling and extraction, scheduled recurring extractions, and PDF files containing extraction results and logs. It currently supports XPath, CSS, and regex queries on HTML pages.
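To show the difference between an XPath query and a regex query, here is a small Python sketch run against the same page. It is not ScrapingBot's API. It uses `xml.etree.ElementTree`, which only parses well-formed markup and supports a limited XPath subset; messy real-world HTML usually needs a dedicated HTML parser, and CSS selectors require a third-party library, so they are left out.

```python
import re
import xml.etree.ElementTree as ET

# A small, well-formed page; ElementTree would reject sloppy real-world HTML.
page = """<html><body>
  <div class="product"><h2>Lamp</h2><span class="price">$24.00</span></div>
  <div class="product"><h2>Chair</h2><span class="price">$79.50</span></div>
</body></html>"""

root = ET.fromstring(page)

# XPath-style query: every <h2> directly inside a div whose class is "product".
names = [h2.text for h2 in root.findall(".//div[@class='product']/h2")]

# Regex query over the raw markup: capture the numeric part of each price.
prices = [float(p) for p in re.findall(r'class="price">\$([\d.]+)<', page)]

print(list(zip(names, prices)))
```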

9. Data Excavator

Data Excavator is an online web scraping tool for e-commerce websites that gathers the data you need from any site. You can use it to gather data quickly and easily from e-commerce sites such as eBay, Amazon, Rakuten, Taobao, and others. It yields a lot of useful product information, which can help you understand trends and predict where they are heading. In batch mode, you can scrape multiple web shops quickly. You can also extract product images in all sizes, additional product details, names, and variants. This is an alternative to DataGrab.

The built-in browser saves time because no extra browser tools need to be installed for data extraction. Full-text search can help you locate data even if you don't know it exists. The tool also lets you compare any two attributes, which makes it simple to determine, for example, a product's largest size. In the Image Editor, you can crop and resize pictures to see how different items compare.
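Batch mode, scraping several shops in one run, can be approximated with a thread pool. The sketch below is a hypothetical illustration rather than Data Excavator's implementation: `scrape_batch` accepts any fetch function, and `fake_fetch` is a stand-in so the example runs offline. One failing shop is recorded instead of aborting the whole batch.

```python
from concurrent.futures import ThreadPoolExecutor

def scrape_batch(shop_urls, fetch, max_workers=4):
    """Scrape several shops concurrently; results come back in input order.

    `fetch` is any callable taking a URL and returning parsed data.
    Each result is a (url, data, error) tuple.
    """
    def safe_fetch(url):
        try:
            return url, fetch(url), None
        except Exception as exc:  # record the failure and keep going
            return url, None, str(exc)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(safe_fetch, shop_urls))

def fake_fetch(url):
    # Stand-in for a real fetch-and-parse step.
    if "broken" in url:
        raise RuntimeError("timeout")
    return {"shop": url, "products": 3}

shops = ["https://shop-a.example", "https://broken.example", "https://shop-c.example"]
for url, data, error in scrape_batch(shops, fake_fetch):
    print(url, data, error)
```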

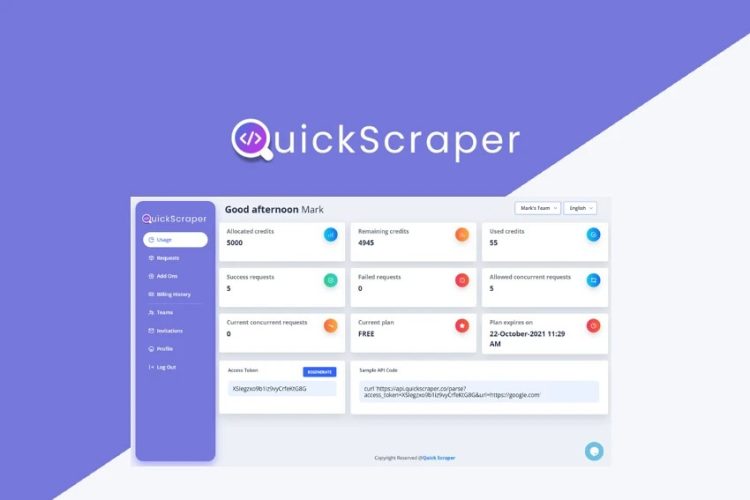

10. QuickScraper

QuickScraper is a powerful, easy-to-use proxy API. It runs inside your application and makes it simple to move data from the web into your database. You can define scraping jobs and start or stop them at any time, so you don't need to worry about the tool slowing down your application while it crawls. It also lets you build bots or scrapers that run on your own servers rather than on other websites.

The main benefit is that requests appear to come from your own servers, so websites that aren't yours won't be able to block your crawler. The second benefit is that you can analyze and store the scraped data on your own servers. The crawler can also run scheduled jobs, whether their planned time is in the past or the future. QuickScraper is easy to use and customize and offers a clear layout. Overall, QuickScraper is a great tool worth considering as a replacement.
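Defining jobs, stopping them before they run, and running overdue jobs immediately can be sketched with Python's `sched` module. This is not QuickScraper's interface: the job names and `run_job` are hypothetical, and a simulated clock makes the example finish instantly (in practice you would pass `time.monotonic` and `time.sleep`).

```python
import sched

class VirtualClock:
    """Simulated clock so the sketch runs instantly."""
    def __init__(self):
        self.now = 0.0
    def time(self):
        return self.now
    def sleep(self, seconds):
        self.now += seconds

clock = VirtualClock()
scheduler = sched.scheduler(clock.time, clock.sleep)
log = []

def run_job(name):
    # Placeholder for a real crawl; records when each job ran.
    log.append((clock.time(), name))

# Jobs relative to now: run in 60 s and in 3600 s.
nightly = scheduler.enter(3600, 1, run_job, argument=("nightly-prices",))
check = scheduler.enter(60, 1, run_job, argument=("stock-check",))
# A job whose planned time is already in the past runs as soon as the scheduler starts.
scheduler.enterabs(clock.time() - 30, 1, run_job, argument=("overdue-backfill",))

# Stop a job before it runs by cancelling its scheduled event.
scheduler.cancel(nightly)
scheduler.run()
print(log)  # [(0.0, 'overdue-backfill'), (60.0, 'stock-check')]
```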